Have you seen YouTube ads recently for a “slippage bot” that uses ChatGPT and promises to help you earn passive income with crypto? It’s a scam. And it’s ripping off a lot of people, all while using unsuspecting actors hired on Fiverr who don’t understand what they’ve been hired to read. One of the actors tells Gizmodo he ultimately didn’t even get paid for his work, despite his face showing up constantly on YouTube under accounts he doesn’t control.

The videos all follow the same basic script. They open with an actor saying they personally created a bot using ChatGPT that allows anyone to earn money without any real coding knowledge. Early in the videos, the actors warn that the crypto community has lots of scammers, so it’s important that the viewer not interact with “unfamiliar wallets” and “unknown exchanges.”

After getting the ironic warning about scammers out of the way, the script then dives into where you can copy code that can be pasted into a website that will supposedly execute a so-called front run on crypto transactions. You’ll have to link up your crypto wallet, of course, for the entire thing to work. The underlying idea, also known as a sandwich attack, is a real thing done by unethical crypto traders to extract money. But that’s not what will happen if you try to execute the code in the video.

If you just skim the code, there’s nothing obviously suspicious about it, aside from the promise of free money. But that’s because the real destination wallet address has been hidden inside by splitting it into pieces that only get joined together when the code runs. Anyone who connects their MetaMask wallet and runs the code simply sends their crypto to one of many wallets controlled by the anonymous scammers.
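To give a rough sense of how a split-up address can slip past a casual read, here is a minimal Python sketch. It is not the scammers’ code, which isn’t reproduced here. It assumes the pieces are stored as separate quoted string literals in the order they get joined, and the function name, the sample snippet, and the address in it are all made up for illustration. The only real-world fact it relies on is that an Ethereum address is `0x` followed by 40 hexadecimal characters.

```python
import re

# A quoted string literal that is either "0x" plus hex digits, or hex digits alone.
FRAGMENT = re.compile(r'"(0x[0-9a-fA-F]*|[0-9a-fA-F]+)"')
# An Ethereum address: "0x" followed by exactly 40 hex characters.
ADDRESS = re.compile(r"^0x[0-9a-fA-F]{40}$")


def find_hidden_addresses(source: str) -> list[str]:
    """Join consecutive hex-looking string literals and return any full addresses."""
    fragments = FRAGMENT.findall(source)
    found = []
    for start, first in enumerate(fragments):
        # Only start joining from a piece that looks like the head of an address.
        if not first.startswith("0x"):
            continue
        joined = ""
        for piece in fragments[start:]:
            joined += piece
            if len(joined) > 42:
                break  # too long to be an address, give up on this start
            if ADDRESS.match(joined):
                found.append(joined)
                break
    return found


# Hypothetical snippet: no single line looks like a wallet address.
sample = '''
string memory partA = "0x1234567890";
string memory partB = "abcdefABCDEF";
string memory partC = "1234567890abcdef12";
'''

print(find_hidden_addresses(sample))
```

A check like this would only catch the simplest version of the trick. Pieces that are reordered, encoded, or computed at run time would get past it, so it’s no substitute for simply not running code from a YouTube ad.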

Beyond minor changes to the script, the only real difference between many of the videos is the wording about how much you’ll supposedly make with their technique. Sometimes the titles and thumbnails promise $2,000 or $3,000 per day, while others list the amounts in Ethereum or percentages. But anyone who follows the video’s instructions is going to make precisely zero dollars, and instead send their crypto to scammers.

Gizmodo has yet to find evidence that anyone who’s appearing in these videos knows they’re participating in a scam. In fact, all three of the people we’ve talked to were hired on Fiverr for video and voice-acting work. And it’s pretty clear why these scammers are hiring real actors. By laying down a few hundred dollars at a gig-work site like Fiverr (one actor told us he got $500), these scammers get real people to be the face of their scam without ever revealing their own identities while raking in the real money.

As you can see in the short video compilation we’ve put together below, the script being read is identical across hundreds of videos on YouTube.

The videos have been an enormous headache for some of the actors involved because there appears to be an army of new YouTube accounts posting the videos every day. The actors have no control over how the videos are being used, and even if they’re able to get some taken down, they’re reappearing constantly on other accounts.

“I’ve been contacted by internet security professionals, OSINT enthusiasts, […] one victim, AND multiple people in my real life relationships that have had the video hit their feeds,” one of the actors, Scott Panfil, told Gizmodo by email on Sunday.

Panfil, a 41-year-old music teacher in New York, went on to say that at least four “actual friends” have reached out to him since seeing the videos in their YouTube ads. He contacts YouTube every time he finds a new video to get it taken down, but it’s quite a game of whack-a-mole.

Panfil reached out to Fiverr to ask about the account that hired him and was told it was terminated. But he says Fiverr insisted it couldn’t do anything beyond that. A spokesperson for Fiverr told Gizmodo in an email Tuesday that the company has blocked the accounts brought to its attention and is going to provide Panfil with some kind of compensation after he wasn’t paid.

“Any attempt to defraud or scam others is in clear violation of our terms of service and strictly prohibited. It is against our Community Standards to allow anyone to use services offered through Fiverr to promote intentionally misleading information or fraud, or that can pose financial risks for our users,” the Fiverr spokesperson said.

Many of the scammy videos are unlisted, meaning they don’t show up in regular YouTube and Google search results. But they frequently appear as paid ads, as you can see below in a screenshot Gizmodo took on March 23. That video on the far right featuring Panfil is marked “sponsored” just below the title, indicating that it’s being promoted through YouTube’s ad program.

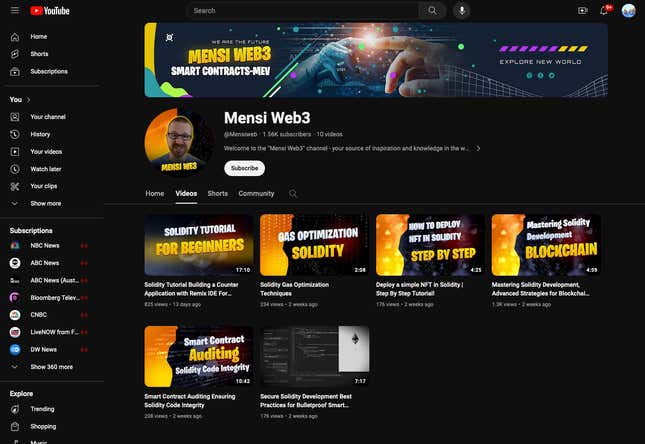

Anyone who clicks to view the supposed creator’s other videos will see just five or six created recently. Those videos tend to be generic explainers on technical topics like NFTs and smart contracts, making it appear as though the person who ostensibly created this YouTube page knows what they’re talking about when it comes to crypto.

But the person who’s featured on each YouTube account has no control over the content that appears there, as you can see in an account using Panfil’s face below. These people were just hired for a single video on Fiverr and their videos are being reused repeatedly.

Most of the tutorials acting as a smokescreen aren’t even narrated. They’re boring and act as nothing more than cover for the paid ads that aren’t even visible to a basic search on YouTube. Again, it should be stressed that the people who have appeared in these ads probably have no idea they’re promoting a scam. And their videos are being used across several accounts.

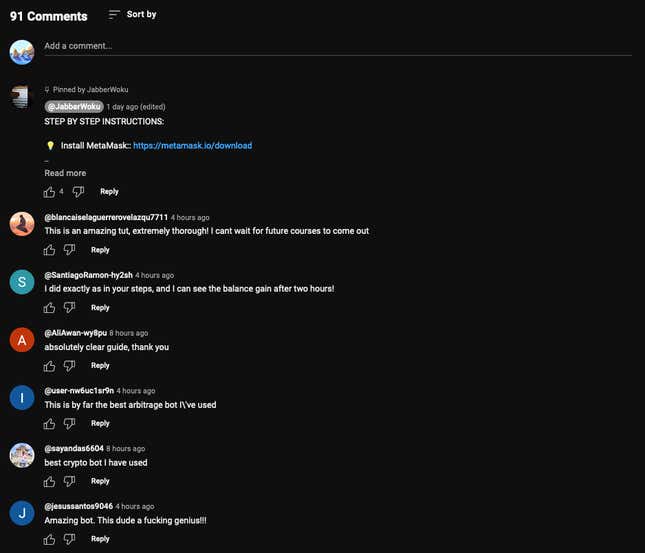

The other common element in all of these YouTube videos is that the comments are overwhelmingly positive, clearly a result of some bot network trying to lend the scams credibility.

Oddly enough, Gizmodo first learned about this scam about a month ago while combing through consumer complaints filed with the FTC that mention ChatGPT. We obtained the complaints through the Freedom of Information Act, and one complaint, which was filed on Dec. 27, 2023, really caught our eye:

I found a video from YouTube “etsy web3 dev,” YouTuber video called “I used ChatGPT arbitrage Trading Bot to make $1,248 per day – passive income.”

I followed instructions to deposit 0.5 Ethereum from my Metamask crypto wallet to an Etherscan contract that I was instructed to create. I did create the contract then used the compiler in ChatGPT to generate profit from my 0.5 ETH that I sent from my Metamask account. Nothing was found in my wallet and YouTuber could not respond my message. I tried again with 0.65 ETH the following day, but still nothing showed in my contract. I suspect the YouTuber to take in a way my Ethereum through the instructions are crooked to steal my money that are now worth $2,500 total.

The name of the person who filed the complaint was redacted by the FTC, which is standard practice when the agency releases documents through FOIA, so we were unable to reach out to that person directly. But having the name of a scammy video led us down a rabbit hole where we found hundreds of other videos with similar titles, all promising easy passive income. They were all using the same script and being read by real people.

Ever since we started looking into this scam, the YouTube videos have become even more common, as others on social media have reported seeing them frequently in recent days. YouTube spokesperson Javier Hernandez says the platform has “strict policies in place to protect the YouTube community” and six channels have been terminated for scams and other deceptive practices.

“We are also in the process of reviewing the ads in question and will take the appropriate action on those that violate our ads policies,” Hernandez said via email.

Most of the videos have real actors, but there are some where we never see a person appear aside from the thumbnail. And while almost all of the videos we found containing this scam featured male actors, some featured female voice actors. Those videos rarely showed an actual woman on screen, aside from some thumbnails.

How much are these scammers making? That part is hard to figure out precisely, but Gizmodo messaged one victim who said he lost 1 ETH, which is about $3,300 at the current price. Based on anecdotal reports on various crypto forums, the amount being pulled in by these scammers is almost certainly reaching into the hundreds of thousands of dollars and beyond.

There are a handful of video explainers trying to warn people about the scam. But they haven’t gotten much attention. This video, for example, has just 75,000 views at the time of this writing despite being up for almost a year. Disturbingly, some of the videos claiming to debunk the scam are actually just trying to push their own version of the scam by debunking other videos and claiming they have a real bot that can generate this kind of passive income.

Needless to say, you should be wary of anyone promising a way to make easy money. And if you’re an actor looking to do some work through Fiverr, make sure you understand what you’re reading. Because even if you get paid, you could be confronted with some major frustrations down the line if it winds up being a scam.
